RADECS and Research (Pt. 1)

Over the past year or so, I’ve been working with a professor from the University of Saskatchewan and a graduate student to test the reliability of NVIDIA’s GPU chips under radiation. Our paper was selected for RADECS, an international conference held in Spain, and I’ll have the amazing opportunity to travel there in September to present our work. I thought I should dedicate a blog post to the research we have done and what we found.

The entire paper is linked here for reference and includes an abstract, setup, design, findings, and conclusions. I’ll first give an overview and then I’ll dive into how we conducted the experiment.

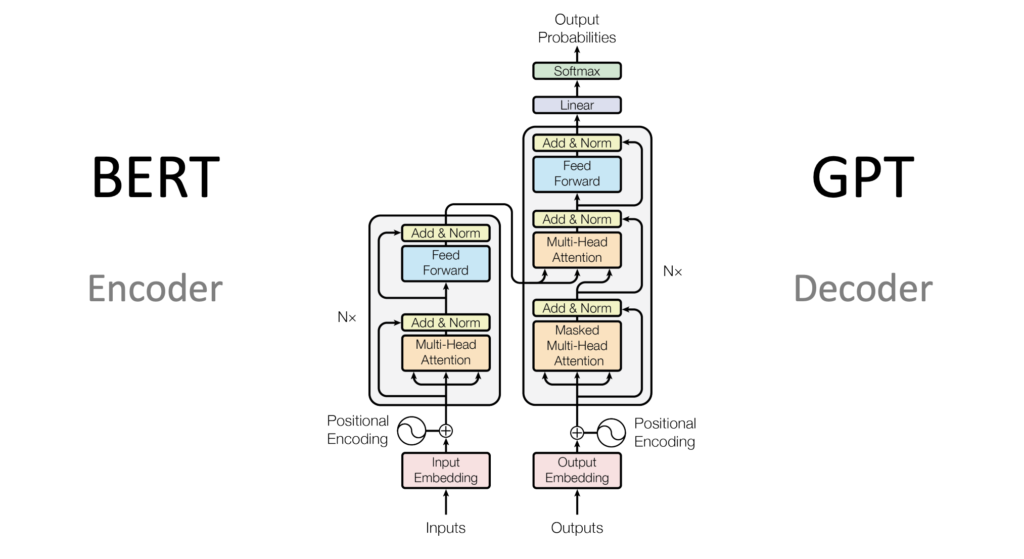

The gist of this experiment was to test how NVIDIA’s Jetson AGX GPU would perform tasks under radiation. The task was to classify movie reviews as either positive or negative. Because the input is text, the GPU runs a transformer model, and it uses only the encoder part of the transformer for classification. Instead of testing many reviews, we tested a single review that sat on the boundary between negative and positive but should be classified as positive (in other words, a review with a score very close to 0.5). We chose it because we wanted to see what errors radiation exposure would cause in the GPU, and with a review so close to the middle, even minor errors could push its score below 0.5 and cause the model to misclassify it as negative.
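To see why a borderline review makes such a sensitive test, here is a minimal sketch. It assumes, hypothetically, that the classifier produces a single logit that a sigmoid turns into the probability of the review being positive, with 0.5 as the cutoff. The logit values are made up for illustration and are not from our experiment.

```python
import math


def positive_probability(logit: float) -> float:
    """Hypothetical: turn a classifier logit into P(positive) with a sigmoid."""
    return 1.0 / (1.0 + math.exp(-logit))


def classify(logit: float) -> str:
    """Label a review positive if P(positive) is at least 0.5."""
    return "positive" if positive_probability(logit) >= 0.5 else "negative"


borderline_logit = 0.04  # made-up value: P(positive) is about 0.51
print(classify(borderline_logit))         # positive
print(classify(borderline_logit - 0.05))  # negative: a tiny error flips the label
```

A review the model is confident about would need a much larger error to cross 0.5, which is why a borderline review exposes small errors that would otherwise go unnoticed.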

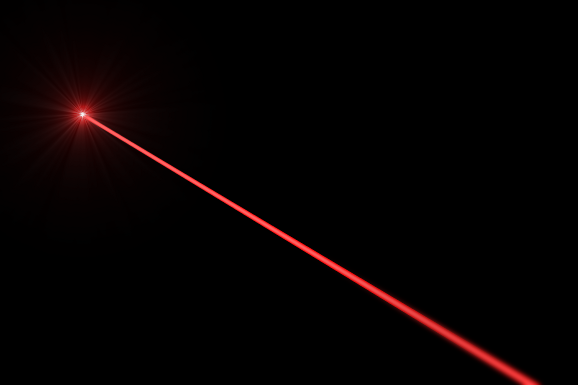

How did we expose the GPU to radiation, you may ask? We put it under a TPA (Two-Photon Absorption) laser, which focuses intense laser pulses into the chip. Where two photons are absorbed at the same time, they deposit charge in the GPU, mimicking a radiation strike and potentially causing bits to flip. If enough bits flip, or if critical bits flip, the movie review can be misclassified.
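To give a feel for why some bits are more critical than others, here is a small sketch that flips individual bits of a 32-bit floating-point number. It assumes the values involved are stored as IEEE 754 float32, and the `flip_bit` helper is hypothetical; it is not how the laser or our test setup actually produced errors.

```python
import struct


def flip_bit(value: float, bit: int) -> float:
    """Hypothetical helper: flip one bit (0 = least significant) of a float32."""
    # Reinterpret the float32's 4 bytes as an unsigned 32-bit integer.
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    # XOR toggles the chosen bit; then reinterpret the bits as a float32 again.
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


print(flip_bit(1.0, 0))   # 1.0000001192092896 (lowest mantissa bit: harmless)
print(flip_bit(1.0, 31))  # -1.0 (sign bit)
print(flip_bit(1.0, 30))  # inf (top exponent bit: catastrophic)
```

Flipping the lowest mantissa bit barely changes the value, while flipping the top exponent bit turns 1.0 into infinity, which would then carry through every later layer of the model.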

After testing many different metrics to see which would best tell us whether a misclassification would happen, we concluded that the L2 distance (also known as Euclidean distance) between two outputs was the way to go. Transformer models (like most models, in fact) have many layers. Each layer produces an output, which becomes the input to the next layer, and so on. Our transformer model had 6 layers, so there were 6 outputs, although in the end we only see one: the output of the 6th layer, which depends on the outputs of the previous 5. To understand when a critical error (an error that would cause this specific movie review to be classified as negative) would occur, we used an approach called DMR (Dual Modular Redundancy).
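For reference, if the output of a layer from two runs is flattened into two vectors $\mathbf{a}$ and $\mathbf{b}$ with $n$ values each, the L2 distance between them is:

$$
d(\mathbf{a}, \mathbf{b}) = \lVert \mathbf{a} - \mathbf{b} \rVert_2 = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2}
$$

Identical outputs give a distance of 0, and the distance grows as the two outputs drift apart.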

In this DMR approach, we ran the model on the same movie review twice and recorded the output of every layer. We then compared the outputs of the two runs at each layer using the L2 distance formula. We found that when this L2 distance was greater than 1.0, the review would most likely be misclassified.
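Here is a minimal Python sketch of the DMR comparison described above. It assumes each run’s layer outputs have already been flattened into plain lists of floats (the real outputs live on the GPU as tensors), and `l2_distance` and `dmr_check` are hypothetical helper names; only the 1.0 threshold comes from our experiment.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l2_distance(a: Vector, b: Vector) -> float:
    """Euclidean (L2) distance between two flattened layer outputs."""
    if len(a) != len(b):
        raise ValueError("layer outputs must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dmr_check(run1: Sequence[Vector], run2: Sequence[Vector], threshold: float = 1.0) -> List[int]:
    """Compare two runs layer by layer; return the (1-based) layers that disagree."""
    return [
        layer
        for layer, (a, b) in enumerate(zip(run1, run2), start=1)
        if l2_distance(a, b) > threshold
    ]


# Two runs of a toy 2-layer model; in run 2, layer 2 was disturbed.
run1 = [[0.1, 0.2], [0.3, 0.4]]
run2 = [[0.1, 0.2], [1.8, 0.4]]
print(dmr_check(run1, run2))  # [2]: a distance of about 1.5 exceeds 1.0
```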

We saw that for roughly every 50 runs of the DMR approach, about 1 to 2 misclassifications would occur. Next, we needed to figure out how to solve that problem, and we had two options. The first is called TR-TMR (Timing Redundant Triple Modular Redundancy). This approach runs the model three times. If at least two of the three outputs of a layer are the same, that majority output is used as the input to the next layer. If all three outputs are different, the two outputs with the shortest L2 distance between them are chosen, their average is taken, and that average is sent to the next layer. This approach proved to be very accurate, but also very time-consuming, so we came up with another approach.
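The TR-TMR voting step can be sketched like this. It is an illustration under assumptions, not our actual implementation: "the same" is taken to mean bit-for-bit equal outputs, each layer is modeled as a plain Python function on a list of floats, and `tr_tmr_vote` and `tr_tmr_forward` are hypothetical names.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2) distance between two flattened layer outputs."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def tr_tmr_vote(outputs: Sequence[Vector]) -> Vector:
    """Choose the output to forward from three redundant runs of one layer."""
    if len(outputs) != 3:
        raise ValueError("TR-TMR expects exactly three outputs")
    pairs = [(0, 1), (0, 2), (1, 2)]
    # Majority vote: if any two runs match exactly, forward that output.
    for i, j in pairs:
        if outputs[i] == outputs[j]:
            return list(outputs[i])
    # All three differ: average the pair with the smallest L2 distance.
    i, j = min(pairs, key=lambda p: l2_distance(outputs[p[0]], outputs[p[1]]))
    return [(x + y) / 2 for x, y in zip(outputs[i], outputs[j])]


def tr_tmr_forward(layers: Sequence[Layer], x: Vector) -> Vector:
    """Run each layer three times in a row and forward the voted output."""
    for layer in layers:
        x = tr_tmr_vote([layer(x), layer(x), layer(x)])
    return x


print(tr_tmr_vote([[1.0, 2.0], [1.0, 2.0], [5.0, 5.0]]))  # [1.0, 2.0]
print(tr_tmr_vote([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]]))  # [0.5, 0.0]
```

Every layer always runs three times here, which is exactly where the time cost of TR-TMR comes from.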

The next approach was a compromise between the time taken and the accuracy of the model. It is called HS-TMR (Hybrid Selected Triple Modular Redundancy). In this approach, the model is run twice. Only if the L2 distance between the two outputs of the same layer exceeds a threshold does the model run a third time; the two outputs with the shortest L2 distance between them are then averaged, and that average is sent to the next layer. Because the third calculation only happens when the threshold is crossed, we save time while still keeping the model fairly accurate.
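Likewise, here is a minimal sketch of one HS-TMR step. The `hs_tmr_layer` name is hypothetical, the default threshold of 1.0 is an assumption borrowed from the DMR check, and forwarding the first output when the two runs agree within the threshold is one reasonable choice rather than a detail taken from our setup. The function also returns how many times the layer ran, to show where the time savings come from.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2) distance between two flattened layer outputs."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hs_tmr_layer(layer: Layer, x: Vector, threshold: float = 1.0) -> Tuple[Vector, int]:
    """Run one layer with HS-TMR; return the forwarded output and the run count."""
    first, second = layer(x), layer(x)
    if l2_distance(first, second) <= threshold:
        # Assumption: the two runs agree closely enough, so forward the first one.
        return first, 2
    # The runs disagree: pay for a third run, then average the closest pair.
    outputs = [first, second, layer(x)]
    i, j = min(
        [(0, 1), (0, 2), (1, 2)],
        key=lambda p: l2_distance(outputs[p[0]], outputs[p[1]]),
    )
    return [(a + b) / 2 for a, b in zip(outputs[i], outputs[j])], 3


# A fault-free layer agrees with itself, so it only runs twice.
print(hs_tmr_layer(lambda v: [t + 1 for t in v], [0.0]))  # ([1.0], 2)

# Simulate a fault in the second run by replaying canned outputs.
canned = iter([[0.0], [3.0], [0.2]])
print(hs_tmr_layer(lambda v: next(canned), [0.0]))  # ([0.1], 3)
```

In the common case where both runs agree, each layer runs only twice instead of three times, which is how HS-TMR saves time compared with TR-TMR.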

Our findings show that TR-TMR was the most reliable, but because it took too much time and was not much more accurate than HS-TMR, HS-TMR proved to be the best approach overall. We were able to analyze the effects of radiation on NVIDIA’s Jetson AGX GPU, showed that radiation can cause severe errors, and found a solution that mitigates these effects without being too time-consuming.

This has been quite a long blog post, so I’ll leave it there. In the next post, I’ll talk about our paper’s acceptance to RADECS and how I’m preparing for this amazing opportunity. Thank you so much for reading, and I hope to see you again next time!